The Sreenidhi Institute of Science and Technology (SNIST) is a technical institute located in Hyderabad, Telangana, India. It is one of the top colleges in Telangana. The institution is affiliated to the Jawaharlal Nehru Technological University, Hyderabad (JNTUH). In the year 2010-11, the institution attained autonomous status, becoming the first college under JNTUH to do so.[1][2] The institution has been proposed for deemed university status from the year 2020-21.

SNIST was established in 1997 with the approval of All India Council for Technical Education, Government of Andhra Pradesh, and is affiliated to Jawaharlal Nehru Technological University, Hyderabad. SNIST is sponsored by the Sree Education Society of the Sree Group of Industries.

It runs undergraduate and postgraduate programs and is engaged in research activity leading to Ph.D. Sreenidhi is recognized by the Department of Scientific and Industrial Research as a scientific and industrial research organization.

The institution was accredited by the NBA of AICTE within five years of its existence. It has received World Bank assistance under TEQIP.

The Challenge:

As a technical institute, Sreenidhi needs to stay in close contact with students, parents, and other stakeholders online as well as in person. Education needs to be easily accessible, and in a world shaped by technology it makes sense to bring it closer to the internet.

Sreenidhi needed a solution in which the web application could be managed without infrastructure maintenance, at lower cost, and with less monitoring effort.

The hosting solution provided by AWS was affordable and delivered high availability, reliability, and scalability.

In short, the basic challenges were finding a hosting platform that was cost effective and highly available, and AWS met all of these requirements.

The requirement for millisecond latency is met by Amazon DynamoDB.

Why Amazon Web Services

Migrating to AWS to Host a Popular Website and Analyze App and Web User Data

Amazon Web Services (AWS) helped get the job done in several ways. For website hosting and related needs, a serverless solution was designed from the services described below.

AWS provides scalable and flexible services, built on different technologies, that allow developers to build applications for any business need. In this case we use the following serverless services to show how different technologies can work together in the same application, so that the focus stays on security and business logic instead of maintaining and operating infrastructure.

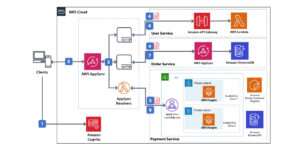

The architecture used to host the application is built from the following services.

AWS AppSync is a managed service that uses GraphQL to make it easy for applications to get exactly the data they need by letting you create a flexible API to securely access, manipulate, and combine data from one or more data sources.

The Amplify Framework allows developers to create, configure, interact, and implement scalable mobile and web apps powered by AWS. Amplify seamlessly provisions and manages your mobile backend and provides a simple framework to easily integrate your backend with your iOS, Android, Web, and React Native frontends. Amplify also automates the application release process of both your frontend and backend allowing you to deliver features faster.

AWS Amplify Console provides a Git-based workflow for deploying and hosting full stack serverless web applications.

AWS Amplify CLI is a unified toolchain to create, integrate, and manage the AWS cloud services for your app.

Amazon API Gateway is a fully managed service that makes it easy for developers to create, publish, maintain, monitor, and secure REST APIs at any scale.

AWS Fargate is a serverless compute engine for containers that works with both Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS).

AWS Lambda lets you run code without provisioning or managing servers. You pay only for the compute time you consume. With Lambda, you can run code for virtually any type of application or backend service.
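To make the Lambda description concrete, here is a minimal sketch of a Python handler of the kind that could sit behind Amazon API Gateway in this sort of design. The query parameter `name` and the response message are hypothetical and are not taken from the SNIST application; only the general shape of a proxy-style event and response is assumed.

```python
import json


def handler(event, context):
    """Entry point that Lambda would invoke for an API Gateway proxy request."""
    # Proxy events carry query parameters under this key (it may be None).
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "student")  # hypothetical parameter

    # A proxy integration expects a status code, headers, and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}"}),
    }


if __name__ == "__main__":
    # Local invocation with a hand-built event; no AWS account is needed.
    print(handler({"queryStringParameters": {"name": "SNIST"}}, None))
```

Because the handler is a plain function, it can be exercised locally before it is deployed, which fits the pay-per-invocation model described above.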

Amazon Cognito is a user management service with rich support for user authentication and authorization. You can manage those users within Amazon Cognito or federate them from other identity providers (IdPs).

Amazon DynamoDB is a key-value and document database that delivers single-digit millisecond performance at any scale.

These benefits made AWS the first choice.

The Benefits

Descriptive Architecture:

The current architecture illustrates the benefits offered by AWS managed services:

- It scales to meet the demands of students globally.

- Amazon Cognito provides user authentication and authorization.

- Amazon DynamoDB provides single-digit millisecond response latency.

- AWS Lambda is a serverless, event-triggered service, so you pay only for the compute time used.

- AWS Amplify Console provides a Git-based workflow for deploying and hosting full stack serverless web applications.

We ensured that AWS CloudTrail is enabled in all AWS Regions so that it tracks all activity in the AWS account. The trail delivers its logs to an Amazon S3 bucket that has versioning enabled. We also enabled AWS KMS encryption for these logs, so that even if someone gains access to the bucket, they cannot read the CloudTrail logs without access to the key.
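The trail setup described here can be sketched as the request parameters for the CloudTrail `CreateTrail` and S3 `PutBucketVersioning` API calls. The trail name, bucket name, and key alias below are placeholders, not the values used in the project, and the code only builds the parameters rather than calling AWS.

```python
def trail_settings(trail_name, bucket, kms_key_id):
    """Return keyword arguments for CreateTrail and PutBucketVersioning."""
    create_trail = {
        "Name": trail_name,
        "S3BucketName": bucket,
        "IsMultiRegionTrail": True,  # record events from every Region
        "KmsKeyId": kms_key_id,      # encrypt delivered log files with KMS
    }
    bucket_versioning = {
        "Bucket": bucket,
        "VersioningConfiguration": {"Status": "Enabled"},
    }
    return create_trail, bucket_versioning


if __name__ == "__main__":
    # All three values are hypothetical placeholders.
    trail, versioning = trail_settings(
        "account-audit-trail", "example-trail-logs", "alias/example-trail-key"
    )
    # With boto3 installed and credentials configured, these could be sent as:
    #   boto3.client("cloudtrail").create_trail(**trail)
    #   boto3.client("s3").put_bucket_versioning(**versioning)
    print(trail)
    print(versioning)
```

Keeping the parameters in one place makes it easy to review that the multi-Region flag, the KMS key, and bucket versioning are all set together.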

We ensured that workload health metrics are collected and analyzed. Monitoring guidelines were provided so that continuous monitoring is possible. Workload metrics are monitored continuously with Amazon CloudWatch to see whether any changes are required. We also use third-party tools: Nagios to monitor the client infrastructure, and ELK for log collection and dashboards, so that we can easily track the workload of the client application. In addition, we installed the CloudWatch agent on the servers to collect memory information and other server-level details.
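As an illustration of the memory monitoring mentioned above, the following sketch generates a small CloudWatch agent configuration that reports memory usage. The 60-second interval is an assumption, and the project's actual agent configuration is not shown in this case study.

```python
import json


def memory_agent_config(interval_seconds=60):
    """Build a CloudWatch agent configuration that reports memory usage."""
    return {
        "metrics": {
            # Tag each datapoint with the instance that produced it.
            "append_dimensions": {"InstanceId": "${aws:InstanceId}"},
            "metrics_collected": {
                "mem": {
                    "measurement": ["mem_used_percent"],
                    "metrics_collection_interval": interval_seconds,
                }
            },
        }
    }


if __name__ == "__main__":
    # The agent reads this JSON from its configuration file on the server.
    print(json.dumps(memory_agent_config(), indent=2))
```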

The client's code resides in a Git version control system, and the client wanted to deploy a Java-based application on AWS. To make that happen, we created deployment scripts that deploy the code and application to AWS. We created AWS CloudFormation templates so that infrastructure can be created as needed. We also implemented AWS CodeBuild and AWS CodePipeline for CI/CD and integrated them with Git.
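To show what infrastructure as code looks like in practice, here is a minimal sketch that emits a JSON CloudFormation template declaring a single versioned S3 bucket. The logical ID `ArtifactBucket` and the choice of resource are illustrative assumptions; the project's real templates are not reproduced here.

```python
import json


def bucket_template(bucket_logical_id="ArtifactBucket"):
    """Return a minimal CloudFormation template with one versioned S3 bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": "Illustrative stack: a versioned bucket for build artifacts",
        "Resources": {
            bucket_logical_id: {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    "VersioningConfiguration": {"Status": "Enabled"}
                },
            }
        },
        # Ref on an S3 bucket resolves to the generated bucket name.
        "Outputs": {"BucketName": {"Value": {"Ref": bucket_logical_id}}},
    }


if __name__ == "__main__":
    # The printed JSON can be saved to a file and used as a stack template.
    print(json.dumps(bucket_template(), indent=2))
```

Generating the template from code keeps it under the same Git workflow as the application, so a pipeline can create or update the stack on each change.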

CloudTrail is enabled and its logs are saved in Amazon S3 with the necessary safety measures. We use AWS Config to gather information about the resources used in this project's account, which gives us a better understanding of which AWS resources are in use and how many. We manage logs and troubleshoot servers, networks, and the application, including the workload issues and errors captured in the application, and we provide fixes for them. For example, the client had an application hosted behind an ELB whose health was poor because the ELB was not receiving traffic from the internet: the security group was not opened correctly. We traced the issue and helped the client configure the application correctly. We also created scripts that collect logs and memory information and store them in Amazon S3, which we then use to find the exact issue the application is facing.
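The security group problem described above can be checked mechanically. The sketch below inspects ingress rules in the shape returned by the EC2 `DescribeSecurityGroups` API (`IpPermissions`) and reports whether a TCP port is reachable from the internet. The sample rules are invented for illustration and do not come from the client's account.

```python
def allows_internet(ip_permissions, port):
    """Return True if some rule admits TCP `port` from 0.0.0.0/0."""
    for rule in ip_permissions:
        protocol = rule.get("IpProtocol")
        if protocol == "-1":
            port_ok = True  # "-1" means all protocols and all ports
        elif protocol == "tcp":
            port_ok = rule.get("FromPort", 0) <= port <= rule.get("ToPort", 65535)
        else:
            continue
        if port_ok and any(
            r.get("CidrIp") == "0.0.0.0/0" for r in rule.get("IpRanges", [])
        ):
            return True
    return False


if __name__ == "__main__":
    # Hypothetical rules: SSH is open to the world, HTTPS only to a private range.
    rules = [
        {"IpProtocol": "tcp", "FromPort": 22, "ToPort": 22,
         "IpRanges": [{"CidrIp": "0.0.0.0/0"}]},
        {"IpProtocol": "tcp", "FromPort": 443, "ToPort": 443,
         "IpRanges": [{"CidrIp": "10.0.0.0/16"}]},
    ]
    print(allows_internet(rules, 443))  # False: the load balancer gets no public HTTPS
    print(allows_internet(rules, 22))   # True
```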

Role-based policies are applied to each service as necessary. We followed the rules and policies that AWS sets for APN partners and their customers. For example, if a user needs access to an S3 bucket, we first validate the user type and then grant read-only access, so that the user can only read from the bucket.
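A read-only grant of this kind can be expressed as an IAM policy document. The sketch below builds one for a single bucket; the bucket name is a placeholder and the statement IDs are arbitrary labels chosen for this example.

```python
import json


def read_only_bucket_policy(bucket):
    """Return an IAM policy document allowing list and read on one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ListBucket",
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],
                "Resource": [f"arn:aws:s3:::{bucket}"],  # the bucket itself
            },
            {
                "Sid": "ReadObjects",
                "Effect": "Allow",
                "Action": ["s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{bucket}/*"],  # objects inside it
            },
        ],
    }


if __name__ == "__main__":
    # "example-reports" is a hypothetical bucket name.
    print(json.dumps(read_only_bucket_policy("example-reports"), indent=2))
```

Listing applies to the bucket ARN while reading applies to the object ARNs, which is why the two actions appear in separate statements. No write or delete action is granted.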

Data in transit is encrypted, with SSL/TLS used for data security. As the client required, we used AWS Certificate Manager (ACM) to store the certificates and private keys. For endpoint security, we used the SSL/TLS layer to secure the client environment as per their requirements: we deployed SSL certificates on the ELB that fronts the application. Because the client needs to transmit data, we used VPC endpoints to keep that traffic private, so connections stay secure within the VPC.

All keys are secured with AWS KMS, and the encryption keys are managed securely. AWS IAM is used to distribute and manage the private keys. We mostly use ACM for SSL/TLS certificates: when the client has domain names to map in Route 53 to a load balancer that must have SSL enabled, we use ACM to provide the certificate. For cryptographic operations, we use KMS for encryption.

We ensured that RPO and RTO are provided for individual failures as well as for any other major disaster, based on the current architecture. The following examples illustrate how such objectives are set.

Granular item recovery: A company attorney accidentally deletes a time-sensitive email, then empties the contents of the Trash folder. Since Microsoft Exchange is a business-critical application for this busy company, IT continuously backs up delta-level changes in Exchange. And since their backup application is capable of granular backup and recovery, they can recover the individual message within an RTO of 5 minutes instead of restoring an entire VM for a single message.

E-commerce site: A retail store's self-hosted e-commerce site uses three different databases: a relational database storing the product catalog, a document database that reports historical order data, and an API database connecting to their payment processor's gateway. The document database can reconstruct data from other databases, so its RTO and RPO are within 24 hours. The company replicates the few changes it makes during the week to their provider's DR platform. The API database holds ordering information and needs both RPO and RTO in seconds. IT continuously replicates data to the failover site, which immediately takes over processing should the API database go down.

For example, if you have a 4-hour RPO for an application, then the gap between the last backup and the point of data loss is at most 4 hours. Having a 4-hour RPO does not necessarily mean you will lose 4 hours' worth of data. Should a word processing application go down at midnight and come up by 1:15 am, you might not have much (or any) data to lose. But if a busy application goes down at 10 am and isn't restored until 2:00 pm, you will potentially lose 4 hours' worth of highly valuable, perhaps irreplaceable data. In this case, arrange for more frequent backups that will let you hit your application-specific RPO.
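The RPO reasoning above comes down to simple time arithmetic, sketched below. The data at risk is whatever was written between the last backup and the failure, and a backup schedule satisfies an RPO only if the interval between backups does not exceed it. The dates and times are made up for illustration.

```python
from datetime import datetime, timedelta


def data_loss_window(last_backup, failure):
    """Data written after the last backup and before the failure is at risk."""
    return failure - last_backup


def meets_rpo(backup_interval, rpo):
    """Worst case, the failure happens just before the next backup runs."""
    return backup_interval <= rpo


if __name__ == "__main__":
    rpo = timedelta(hours=4)
    last_backup = datetime(2020, 1, 6, 6, 0)  # hypothetical 06:00 backup
    failure = datetime(2020, 1, 6, 10, 0)     # application fails at 10:00
    print(data_loss_window(last_backup, failure))  # 4:00:00
    print(meets_rpo(timedelta(hours=6), rpo))      # False: back up more often
    print(meets_rpo(timedelta(hours=1), rpo))      # True
```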

Amazon DynamoDB is an important part of the workload, as shown in the architecture diagram, and it meets the requirements for high availability, low latency, and high speed. In the architecture, DynamoDB is used for the order service, that is, for OLTP. It also works with the payment service in a given VPC. The product manager of metrosaga said it was not an easy job to keep visitors engaged with the content, as users leave the site within a few minutes of watching the news or reading other blog content. They wanted to minimize unnecessary expenses.

DynamoDB transactions help our architects achieve atomicity, consistency, isolation, and durability (ACID) across one or more tables within a single AWS account and Region. We can use transactions when building applications that require coordinated inserts, deletes, or updates to multiple items as part of a single logical business operation. AWS describes DynamoDB as the only non-relational database that supports transactions across multiple partitions and tables. We have written batch operations to help us achieve our goal:

- TransactWriteItems, a batch operation that contains a write set with one or more PutItem, UpdateItem, and DeleteItem operations. TransactWriteItems can optionally check for prerequisite conditions that must be satisfied before making updates. These conditions may involve the same or different items than those in the write set. If any condition is not met, the transaction is rejected.

- TransactGetItems, a batch operation that contains a read set with one or more GetItem operations.
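A transactional write of this kind can be sketched as the `TransactItems` payload that a `TransactWriteItems` request carries. The table names `Orders` and `Inventory`, the attribute names, and the stock check are all hypothetical, chosen only to show a write set with conditions; they are not the order service's real schema.

```python
def place_order_transaction(order_id, product_id, quantity):
    """Build the TransactItems list for one all-or-nothing order placement."""
    return [
        {
            # Write the order, but only if this order id does not exist yet.
            "Put": {
                "TableName": "Orders",
                "Item": {
                    "OrderId": {"S": order_id},
                    "ProductId": {"S": product_id},
                    "Quantity": {"N": str(quantity)},
                },
                "ConditionExpression": "attribute_not_exists(OrderId)",
            }
        },
        {
            # Decrement stock, but only if enough units remain.
            "Update": {
                "TableName": "Inventory",
                "Key": {"ProductId": {"S": product_id}},
                "UpdateExpression": "SET Stock = Stock - :q",
                "ConditionExpression": "Stock >= :q",
                "ExpressionAttributeValues": {":q": {"N": str(quantity)}},
            }
        },
    ]


if __name__ == "__main__":
    items = place_order_transaction("order-1001", "book-42", 2)
    # With boto3 installed and credentials configured, this could be sent as:
    #   boto3.client("dynamodb").transact_write_items(TransactItems=items)
    print(items)
```

If either condition fails, DynamoDB rejects the whole transaction, so an order is never recorded without the matching stock decrement.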

We kept the number of projected attributes small to minimize the size of items written to the index, and we optimized frequent queries to avoid fetches from the base table. During the POC phase we observed request throttling on a single partition, so we made sure to use the adaptive capacity feature to rebalance the partitions according to the client's needs. This isolation of frequently accessed items reduces the likelihood of request throttling due to the workload exceeding the throughput quota on a single partition. We also expected that making use of indexing would save space and cost and improve performance.

We used secondary indexes with an alternate key to support the application's query operations, which gives the application access to multiple different query patterns. We took into consideration that DynamoDB adaptive capacity responds by increasing the capacity of a hot partition (partition 4 in the example considered) so that it can sustain the higher workload of 150 WCU/sec without being throttled. We also took into consideration that data can be retrieved from an index using Query, the same way as Query is used with a table. A table can have multiple secondary indexes, and we took the necessary precautions for the case where more than one table with a secondary index is created concurrently.
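The indexing choices above can be illustrated with a table definition that has one global secondary index projecting only a few attributes, plus a Query against that index. The table, index, and attribute names are assumptions made for this sketch, and on-demand billing is chosen only to keep the example short.

```python
def orders_table_definition():
    """CreateTable parameters with a narrow global secondary index."""
    return {
        "TableName": "Orders",
        "BillingMode": "PAY_PER_REQUEST",
        "AttributeDefinitions": [
            {"AttributeName": "OrderId", "AttributeType": "S"},
            {"AttributeName": "CustomerId", "AttributeType": "S"},
            {"AttributeName": "OrderDate", "AttributeType": "S"},
        ],
        "KeySchema": [{"AttributeName": "OrderId", "KeyType": "HASH"}],
        "GlobalSecondaryIndexes": [
            {
                "IndexName": "ByCustomerAndDate",
                # Alternate key: lets the application query by customer.
                "KeySchema": [
                    {"AttributeName": "CustomerId", "KeyType": "HASH"},
                    {"AttributeName": "OrderDate", "KeyType": "RANGE"},
                ],
                # Project only what the frequent query reads, keeping index
                # items small and avoiding fetches from the base table.
                "Projection": {
                    "ProjectionType": "INCLUDE",
                    "NonKeyAttributes": ["OrderStatus", "OrderTotal"],
                },
            }
        ],
    }


def orders_for_customer_query(customer_id):
    """Query parameters that are served entirely from the index."""
    return {
        "TableName": "Orders",
        "IndexName": "ByCustomerAndDate",
        "KeyConditionExpression": "CustomerId = :c",
        "ExpressionAttributeValues": {":c": {"S": customer_id}},
        "ProjectionExpression": "OrderId, OrderDate, OrderStatus, OrderTotal",
    }


if __name__ == "__main__":
    print(orders_table_definition())
    print(orders_for_customer_query("customer-7"))
```

Because every attribute named in the query is either a key or a projected attribute, the index alone can answer it.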

+919356301699 hello@anetautomation.com
